IBM Advert - December 1984

From Personal Computer World

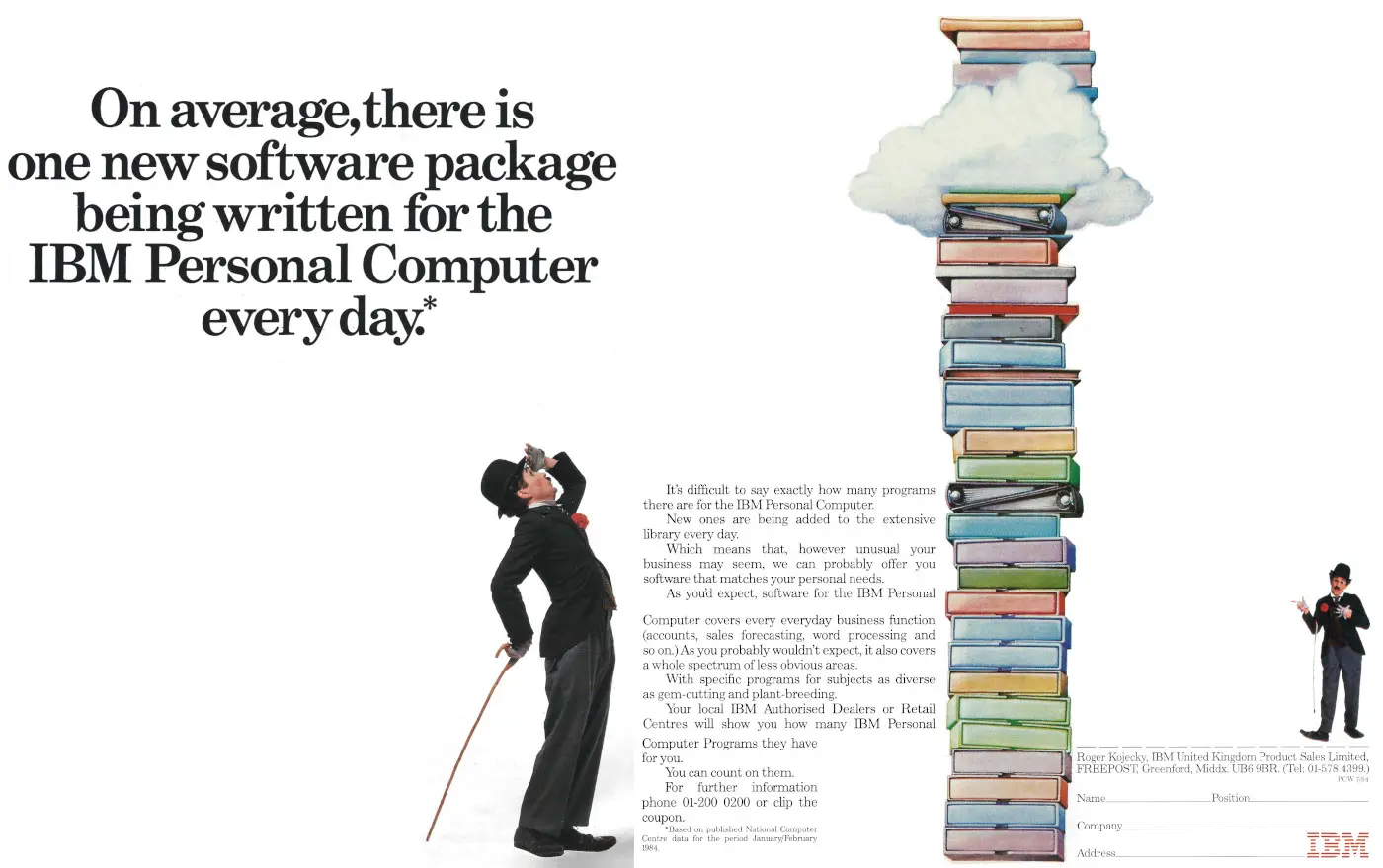

On average, there is one new software package written for the IBM Personal Computer every day

IBM's original PC - the 5150 - had been the machine that spawned a whole new era of generic, dull and identi-kit computers which ended up trouncing everything that had gone before.

However, this was not so much to do with IBM itself, but more because its 5150 was built from off-the-shelf parts, and the BIOS was easy enough to reverse-engineer - first in the Columbia PC and more famously by Compaq - which meant that all sorts of clone manufacturers quickly piled in.

Seven years had passed since the Commodore PET - the first recognisably-modern "personal computer" - had launched, and hundreds of different machines had come and gone in its wake, especially with the home-computer explosion of 1981-1984, but it wasn't too long before the IBM interpretation of the "PC" pushed almost everything else out of the way.

IBM was, however, slow off the mark in Europe, allowing companies like Sirius/ACT to do well for a while in the business market, until the 5150 found its way to the other side of the Atlantic in 1982.

The follow-up was the XT - reportedly targeted at the Sirius - and then the AT.

All these machines did well in banks and corporates, if not in the wider market, and came to also define the legions of clones that followed them.

IBM seemed to implicitly acknowledge that its buyers were safe and steady corporate types who "never got fired for buying an IBM", and so it seldom seemed to advertise particular machines directly; rather, it concentrated on maintaining its name in the market.

This was exemplified by this sort of advert (which is an amalgam of a three-page spread), featuring the "Charlie Chaplin" motif that ran for several years, which extolled the virtue of how much software was available for the IBM PC.

In reality, it was software - availability, consistency and compatibility - that allowed the IBM PC to reign supreme.

For the first time, users could buy a machine and know not only that packages they had used before would work on any PC, but also that their purchases would most likely work on the next PC they bought.

IBM was also very slow to develop its PC once it had actually launched it, and it really needed some developing as the original came with a feeble 64K - no more than many "toy" home computers at the time.

This was becoming quite a limiting factor when running serious business applications, many of which were rapidly getting bigger and more demanding.

To overcome this, early machines did a lot of memory paging - saving and reading memory from disk - but the low specification also meant that software writers had to cope with 64K as a lowest common denominator.

The situation was also made worse by the fact that IBM's earliest PC was floppy-disk only, which only made paging slower - four to ten seconds to get a response by some accounts[1].

By the time the AT came out in 1984, IBM seemed to have learned from the limitations of the 5150.

By now, a hard disk was standard and IBM's AT came with Intel's 80286, which ran three times faster than the 8088. That's a significant leap in performance: for a machine to go three times faster in 2024 would be like upgrading from a 4GHz CPU to a 12GHz one overnight.

Much like the 5150 before it, the AT failed to make an appearance in Europe until ages after its US release.

Even by the early spring of 1985, Guy Kewney in Personal Computer World was writing about how financial battles within IBM were preventing the company from selling to its European division at anything sufficiently below the retail price to make it worth selling.

Not only that, but it was suggested that unreliable hard disks and chip shortages meant that quite often the machines didn't even work[2].

One explanation offered for IBM's tardiness and dull machines came a couple of years later in April 1986's Personal Computer World, when it was suggested by Martin Healy, a professor at Cardiff University, that:

"Existing technology is not being properly made available by manufacturers and most users are totally blind to what is really possible".

Guy Kewney reckoned that in IBM's case, its main aim was not the supply of the best possible machine, but rather it was the "management of a profitable range of stock".

An example was given of IBM's announcement of some local area networking equipment, which was made solely to protect IBM's interests, and was actually against users' interests. Healy continued:

"If the IBM network had been a good one, instead of this effort that is already out of date, network users would have realised how much more they could do than if they bought [IBM's office minicomputer] System 36. Users would also have discovered how easy it is to put only one or two IBM PCs on a network, and use cheaper and more powerful compatibles for the other nodes. Neither of those two possibilities was in IBM's interest: therefore they announced a LAN that was not of sufficient quality to allow users that freedom".

The return of AI

Back at the end of 1984 (appropriately), IBM also announced details of some updated speech recognition technology it had been working on.

It could recognise some 5,000 words, could even distinguish homophones based on context ("to", "too", "two") and could achieve up to 95% accuracy on well-spaced conversation.

However, in a spooky precursor to Edward Snowden's revelations, it was revealed that it was used by security agencies to automatically eavesdrop on telephone conversations.

The previous version of the system could transcribe no more than 30% of telephone conversations and would quite often produce nonsense.

However, what it did do was to save a lot of time when it did work, giving surveillance workers a way of scanning through a phone conversation at a glance, to see if "the conversation was likely to be of interest".

As Guy Kewney pointed out in December 1984's Personal Computer World, "the difference between 30% and 90% success rate, with intelligence enough to recognise individual words, rather than just print a phonetic transcript, is phenomenal"[3].

IBM reckoned that it was "a reasonable goal" to expect its machine to transcribe continuous speech "ultimately".

A paper-tape version of Eliza - sometimes called the first AI program - by Joseph Weizenbaum, at the Centre for Computing History, Cambridge

This was part of the resurgence of Artificial Intelligence - AI - as a "thing", after the first wave, based largely upon the concept of Expert Systems, had fizzled out by the early 1980s[4].

These early expert "AI" programs were often limited to scenarios where known inputs with statistical probabilities could be matched up with certain outputs, for example a 50% chance of A plus 35% of B and 10% of C means X.

Simple equations like this were commonly used in fields like medical diagnosis, for instance in the earliest "computerised psychologist" Eliza, written by Joseph Weizenbaum in the 1960s.
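The weighted-evidence idea described above (50% for A, 35% for B, 10% for C, together meaning X) can be sketched in a few lines of Python. This is only one possible reading of that example, not a reconstruction of any real expert system: the additive scoring, the 90-point threshold and the names `RULE_X`, `score` and `concludes_x` are all assumptions made for illustration.

```python
# Illustrative only: the weights are the figures quoted in the text, as
# whole percentage points. Adding them up and comparing against a
# threshold is an ASSUMPTION, not a documented expert-system method.
RULE_X = {"A": 50, "B": 35, "C": 10}


def score(findings, rule=RULE_X):
    """Sum the weights of the rule's inputs that are present in findings."""
    return sum(weight for name, weight in rule.items() if name in findings)


def concludes_x(findings, threshold=90, rule=RULE_X):
    """Return True when the combined weight reaches the (hypothetical) threshold."""
    return score(findings, rule) >= threshold


if __name__ == "__main__":
    for observed in ({"A", "B", "C"}, {"A", "B"}, {"C"}):
        print(sorted(observed), score(observed), concludes_x(observed))
```

With all three inputs present the combined weight is 95 and the rule fires; with only A and B it is 85 and, under the assumed threshold, it does not. The point is how little "intelligence" is involved: the program can only match inputs it has already been told about.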

As an editorial in the September 1982 edition of Practical Computing suggested, Expert Systems were no more than "a fuzzy index to an operator's manual", where that manual may not even contain the page indexed. The editorial continued:

"If they are regarded as soggy databases, there is no doubt they can be made to be useful in many well-limited areas of human decision. The danger comes when they, and other AI techniques billed for stardom in the Fifth Generation machines are hyped up as the final solution to the world's problems"[5].

Just like 2026 then?

IBM UK's financial results, 1984

In the spring of 1985, Practical Computing reported on IBM UK's financial results for the year to December 1984.

It revealed that sales in the UK increased by 26% over the previous year, whilst exports were up by 58% and pre-tax profits were up by 27%.

Turnover for the year was £2.35 billion, or about £8 billion in 2026, with after-tax profits of £200 million (£870 million).

The magazine also revealed that IBM had invested £149 million (£650 million) into the UK and that it employed 17,506 people - up 1,380 on the previous year[6].

Date created: 15 October 2019

Last updated: 29 January 2026

Sources

Text and otherwise-uncredited photos © nosher.net 2026. Dollar/GBP conversions, where used, assume $1.50 to £1. "Now" prices are calculated dynamically using average RPI per year.